Generative diffusion models have made remarkable progress in high-fidelity image generation. However, efficiently customizing these models to generate images that combine multiple distinct concepts, without extensive retraining, remains challenging. This paper presents a novel zero-shot multi-concept customization approach for diffusion models. It integrates SDXL, InstantID, and Grounding DINO to enable accurate personalization from textual descriptions and reference images. Our method uses SDXL for text-guided generation, InstantID for identity preservation, and Grounding DINO for spatial mask generation. Fusing the latent representations guided by these components produces images that faithfully incorporate multiple distinct concepts, with no need for large-scale dataset collection or model retraining. Experimental results show that our method maintains high generative quality and adapts robustly to new concepts. This research advances the practical application of generative diffusion models, making sophisticated customization more accessible and efficient in real-world settings.
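Grounding DINO produces bounding boxes for text-specified objects, and the diffusion model operates on a downsampled latent grid. The sketch below shows one plausible way to turn detected boxes into per-concept spatial masks at latent resolution. It makes three assumptions that do not come from this page: the boxes are already in pixel coordinates `(x0, y0, x1, y1)`, the VAE downsampling factor is 8 (as in SDXL), and the masks are simple rectangles. The function name `boxes_to_latent_masks` is hypothetical. This is not the authors' implementation.

```python
import numpy as np


def boxes_to_latent_masks(boxes, image_size, latent_downsample=8):
    """Rasterize pixel-space boxes into binary masks on the latent grid.

    Hypothetical helper. Assumes boxes are (x0, y0, x1, y1) in pixels,
    e.g. from a grounding detector, and an 8x VAE downsampling factor.
    """
    height, width = image_size
    lh, lw = height // latent_downsample, width // latent_downsample
    masks = np.zeros((len(boxes), lh, lw), dtype=np.float32)
    for i, (x0, y0, x1, y1) in enumerate(boxes):
        # Map pixel coordinates to latent cells: floor the start, ceil the end
        c0 = int(np.floor(x0 / latent_downsample))
        r0 = int(np.floor(y0 / latent_downsample))
        c1 = int(np.ceil(x1 / latent_downsample))
        r1 = int(np.ceil(y1 / latent_downsample))
        # Clip to the latent grid so out-of-frame boxes do not index past the edges
        c0, c1 = max(c0, 0), min(c1, lw)
        r0, r1 = max(r0, 0), min(r1, lh)
        masks[i, r0:r1, c0:c1] = 1.0
    return masks
```

For example, two side-by-side boxes on a 1024×1024 image, `[(0, 0, 512, 1024), (512, 0, 1024, 1024)]`, give a `(2, 128, 128)` array in which each mask covers one half of the latent grid. Segmentation masks or soft (blurred) masks would be natural alternatives to rectangles.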

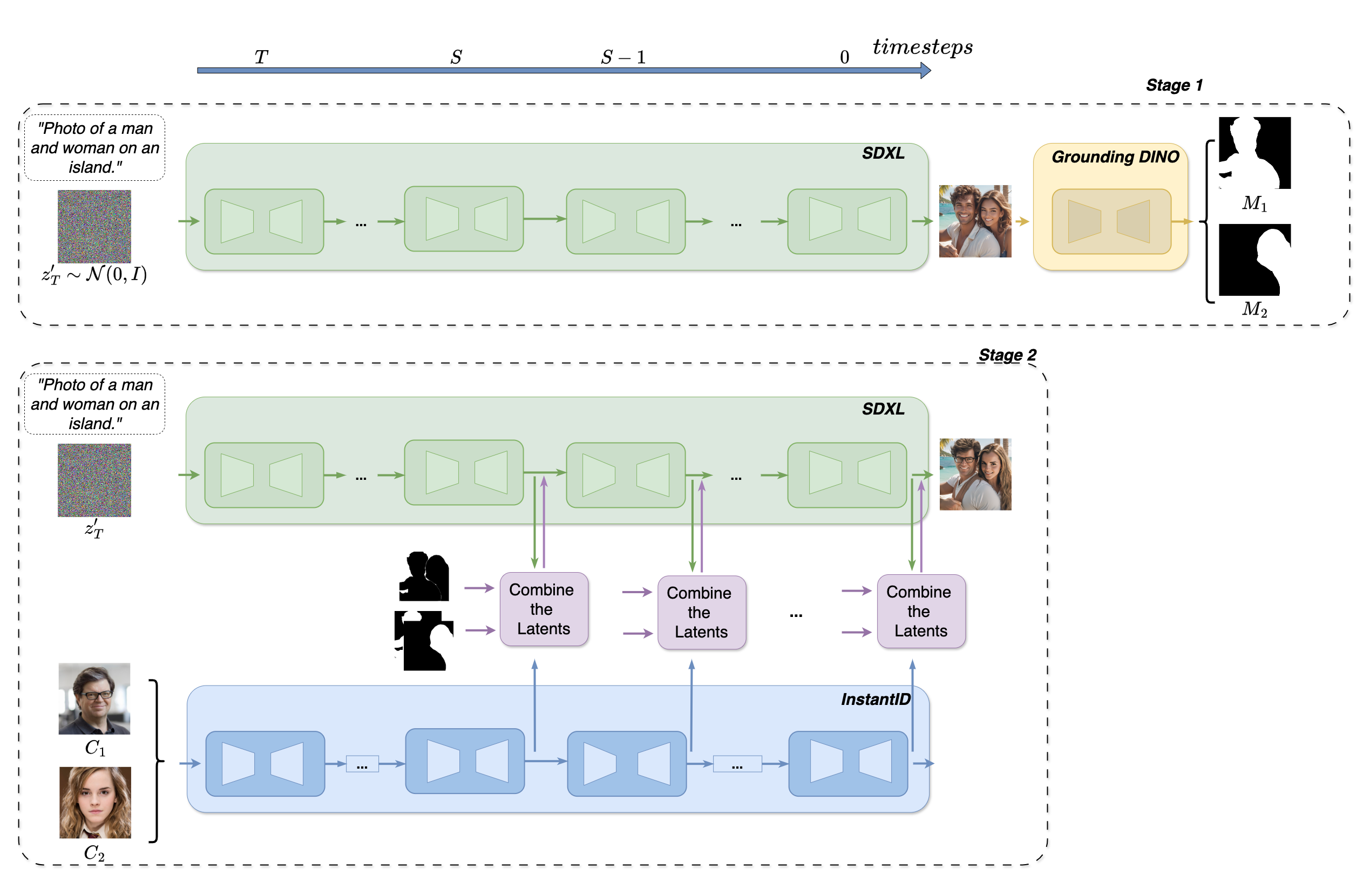

Our approach uses a two-stage framework for multi-concept customization. The first stage generates the layout and collects visual comprehension information. The second stage integrates multiple concepts through concept noise blending. This design yields strong identity preservation and harmonious integration of concepts.
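This page does not specify the exact blending rule. The sketch below illustrates one common form of mask-based noise blending that matches the description: at a single denoising step, each concept-specific branch predicts noise, and the predictions are composited into the base prediction using per-concept spatial masks. Where masks overlap, their weights are renormalized to sum to 1, and the base prediction fills any uncovered area. The function name `blend_concept_noise`, the tensor shapes, and the overlap rule are all assumptions made for illustration. This is not the authors' method.

```python
import numpy as np


def blend_concept_noise(base_eps, concept_eps, masks):
    """Composite per-concept noise predictions into a base prediction.

    Hypothetical helper. Assumed shapes:
      base_eps:    (C, H, W)    noise predicted for the full prompt/layout
      concept_eps: (K, C, H, W) noise predicted by each concept branch
      masks:       (K, H, W)    per-concept weights in [0, 1]
    """
    masks = np.clip(masks, 0.0, 1.0)
    total = masks.sum(axis=0)  # (H, W): summed concept coverage per location
    # Where masks overlap (total > 1), rescale so concept weights sum to 1
    scale = np.where(total > 1.0, 1.0 / np.maximum(total, 1e-8), 1.0)
    weights = masks * scale  # (K, H, W)
    # Whatever weight the concepts leave unused goes to the base prediction
    background = 1.0 - weights.sum(axis=0)  # (H, W)
    concept_part = np.einsum("khw,kchw->chw", weights, concept_eps)
    return background[None] * base_eps + concept_part
```

In a sampler loop, this function would be called once per timestep on the noise predictions before the scheduler update. Because the weights at every location sum to 1, the blended prediction stays on the same scale as the individual predictions.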

Compared with existing methods, our approach performs better in both single-concept and multi-concept customization. It achieves strong identity preservation and harmonious integration of concepts without any additional fine-tuning.

Our method (right) preserves identity better, enhances image quality, and achieves greater photorealism compared to FastComposer (left).
